- #PCA DATA FORMAT IN R HOW TO#

- #PCA DATA FORMAT IN R INSTALL#

- #PCA DATA FORMAT IN R ZIP#

- #PCA DATA FORMAT IN R DOWNLOAD#

```python
loadings_df = loadings_df.set_index('variable')
loadings_df

# positive and negative loadings reflect positive and negative
# correlation of the variables with the PCs

# plot the loadings as a heatmap (variables x PCs)
import seaborn as sns
import matplotlib.pyplot as plt
ax = sns.heatmap(loadings_df, annot=True, cmap='Spectral')
plt.show()
```
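The values in the loadings heatmap are elements of unit-length eigenvectors, so they carry the sign of each variable's correlation with a PC but are not themselves correlation coefficients. Scaling each PC's eigenvector by the square root of its eigenvalue (of the correlation matrix) gives the actual correlations. The NumPy-only sketch below checks this on made-up data against directly computed Pearson correlations; it uses `np.linalg.eigh` instead of sklearn's `PCA`, so eigenvector signs may differ from `components_`, and all names in it are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)
# made-up data: 40 samples x 3 variables, the third partly built from the others
X = rng.normal(size=(40, 3))
X[:, 2] = X[:, 0] - X[:, 1] + 0.2 * rng.normal(size=40)

Z = (X - X.mean(axis=0)) / X.std(axis=0)      # standardized variables
R = np.corrcoef(X, rowvar=False)              # correlation matrix
eigvals, eigvecs = np.linalg.eigh(R)
order = np.argsort(eigvals)[::-1]             # largest variance first
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

scores = Z @ eigvecs                          # PC scores, one column per PC
# correlation-scaled loadings: eigenvector elements times sqrt(eigenvalue)
corr_loadings = eigvecs * np.sqrt(eigvals)    # column k scaled by sqrt(eigvals[k])

# direct check: Pearson correlation of each variable with each PC score
direct = np.array([[np.corrcoef(Z[:, j], scores[:, k])[0, 1]
                    for k in range(3)] for j in range(3)])
print(np.allclose(corr_loadings, direct))     # True
```

Note the two different normalizations: each column (PC) of the raw eigenvectors has squared values summing to 1, while each row (variable) of `corr_loadings` has squared values summing to 1, the variable's full standardized variance.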


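```python
# component loadings or weights: the elements of each PC's eigenvector
# (multiplying a PC's loadings by the square root of its eigenvalue of the
# correlation matrix gives the correlations between the variables and that PC)
# the squared loadings within each PC always sum to 1
loadings = pca_out.components_
num_pc = pca_out.n_components_
pc_list = ["PC" + str(i) for i in range(1, num_pc + 1)]
loadings_df = pd.DataFrame.from_dict(dict(zip(pc_list, loadings)))
loadings_df['variable'] = df.columns.values
```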
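The statement that the squared loadings within each PC sum to 1 follows from the loadings being unit-length eigenvectors. A minimal NumPy sketch on made-up data checks this, along with the fact that the loading vectors of different PCs are orthogonal. It is an assumption-laden illustration: `np.linalg.eigh` stands in for sklearn's SVD-based fit, so individual signs may differ from `components_`.

```python
import numpy as np

rng = np.random.default_rng(1)
# made-up data: 30 samples x 4 variables, then standardized
X = rng.normal(size=(30, 4))
X_st = (X - X.mean(axis=0)) / X.std(axis=0)

# eigendecomposition of the covariance matrix of the standardized data
cov = np.cov(X_st, rowvar=False)
eigvals, eigvecs = np.linalg.eigh(cov)
order = np.argsort(eigvals)[::-1]           # largest variance first
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

# rows = PCs, matching the layout of sklearn's components_
loadings = eigvecs.T
squared_sums = (loadings ** 2).sum(axis=1)
print(squared_sums.round(6))                # 1.0 for every PC
print(np.allclose(loadings @ loadings.T, np.eye(4)))  # True: orthonormal rows
```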

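```python
# standardize each variable to zero mean and unit variance
df_st = StandardScaler().fit_transform(df)

# perform PCA on the standardized data
pca_out = PCA().fit(df_st)

# get the component variance
# Proportion of Variance (from PC1 to PC6)
pca_out.explained_variance_ratio_

# Cumulative proportion of variance (from PC1 to PC6)
np.cumsum(pca_out.explained_variance_ratio_)
```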
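To see where the proportion of variance comes from, here is a NumPy-only sketch (an illustration, not sklearn's actual implementation): each PC's proportion of variance is its eigenvalue of the covariance matrix of the standardized data divided by the sum of all eigenvalues, and `np.cumsum` turns that into the cumulative proportion. The random data, the helper name `explained_variance_ratio`, and the 80% cut-off used to pick how many PCs to keep are all hypothetical.

```python
import numpy as np

def explained_variance_ratio(X_st):
    """Proportion of variance per PC, from the eigenvalues of the
    covariance matrix of standardized data, largest first."""
    cov = np.cov(X_st, rowvar=False)           # variables in columns
    eigvals = np.linalg.eigvalsh(cov)[::-1]    # eigvalsh returns ascending order
    return eigvals / eigvals.sum()

rng = np.random.default_rng(0)
# made-up data: 50 samples x 6 variables, two of them strongly correlated
X = rng.normal(size=(50, 6))
X[:, 1] = X[:, 0] + 0.1 * rng.normal(size=50)
X_st = (X - X.mean(axis=0)) / X.std(axis=0)

ratio = explained_variance_ratio(X_st)
cumulative = np.cumsum(ratio)
# hypothetical rule: keep the fewest PCs whose cumulative proportion reaches 80%
n_keep = int(np.searchsorted(cumulative, 0.80) + 1)
print(ratio.round(3))
print(cumulative.round(3))
print(n_keep)
```

The ratio does not depend on whether the covariance uses `n` or `n - 1` in the denominator, because that factor cancels in the division.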


```python
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler
from bioinfokit.analys import get_data
import numpy as np
import pandas as pd

# load dataset as pandas dataframe
df = get_data('gexp').data
df.head(2)
```

Note: If you have your own dataset, import it as a pandas DataFrame (learn how to import data using pandas).
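`StandardScaler` centers each variable and divides it by its standard deviation, so variables measured on very different scales contribute comparably to the PCs. The sketch below reproduces that z-scoring in NumPy, assuming the default `StandardScaler()` settings (population standard deviation, `ddof=0`, with zero-variance columns left unscaled); the helper `standardize` and the toy matrix are hypothetical.

```python
import numpy as np

def standardize(X):
    """Z-score each column: subtract the column mean and divide by the
    population standard deviation (ddof=0)."""
    X = np.asarray(X, dtype=float)
    mean = X.mean(axis=0)
    std = X.std(axis=0)          # ddof=0, population standard deviation
    std[std == 0] = 1.0          # leave constant columns unscaled instead of dividing by 0
    return (X - mean) / std

# toy data: 4 samples x 3 variables on very different scales (made-up values)
X = np.array([[1.0, 200.0, 0.01],
              [2.0, 180.0, 0.03],
              [3.0, 260.0, 0.02],
              [4.0, 240.0, 0.04]])
X_st = standardize(X)
print(X_st.mean(axis=0).round(6))  # approximately 0 for every column
print(X_st.std(axis=0).round(6))   # 1 for every column
```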


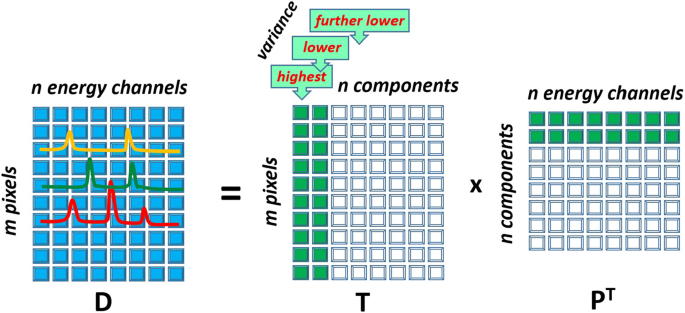

- PCA helps to assess which original samples are similar to and different from each other.
- PCA preserves the global data structure by forming well-separated clusters, but it can fail to preserve the …
- PCA works better at revealing linear patterns in high-dimensional data but has limitations with nonlinear datasets.

For example, when a dataset contains 10 variables (10D), it is arduous to visualize them all at the same time (you may have to make 45 pairwise comparisons to interpret the dataset effectively). PCA transforms the original variables into new uncorrelated variables (PCs), with the top PCs having the highest variation. The PCs are ordered, which means that the first few PCs (generally the first 3, but it can be more) contribute most of the variance present in the original high-dimensional dataset. These top 2 or 3 PCs can be plotted easily and summarize the features of all 10 original variables.

We will use the sklearn, seaborn, and bioinfokit (v2.0.2 or later) packages for PCA and visualization (check how to install Python packages).
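The figure of 45 pairwise comparisons is the number of unordered pairs among 10 variables, C(10, 2) = 10 × 9 / 2 = 45. A quick Python check (the variable names `V1` to `V10` are hypothetical):

```python
from itertools import combinations
from math import comb

# hypothetical names for a 10-variable (10D) dataset
variables = [f"V{i}" for i in range(1, 11)]

# each unordered pair of variables would need its own 2D scatter plot
pairs = list(combinations(variables, 2))

print(len(pairs))   # 45
print(comb(10, 2))  # 45, i.e. 10 * 9 / 2
```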

PCs capture most of the variation, which makes it easy to visualize and summarize the features of the original high-dimensional dataset in a low-dimensional space.
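A minimal NumPy sketch of this dimensionality reduction, assuming PCA is computed by singular value decomposition (SVD) of the standardized data rather than through sklearn's `PCA` class: it keeps the first two PCs as 2D coordinates for plotting. The helper `pca_scores` and the two-factor toy dataset are hypothetical.

```python
import numpy as np

def pca_scores(X, n_components=2):
    """Project standardized data onto its first PCs using SVD.
    Returns (scores, explained_variance_ratio) for the kept PCs."""
    X = np.asarray(X, dtype=float)
    Z = (X - X.mean(axis=0)) / X.std(axis=0)           # standardize columns
    U, S, Vt = np.linalg.svd(Z, full_matrices=False)   # S is sorted descending
    scores = Z @ Vt[:n_components].T                   # same as U[:, :k] * S[:k]
    ratio = (S ** 2) / (S ** 2).sum()
    return scores, ratio[:n_components]

rng = np.random.default_rng(3)
# made-up 10-variable (10D) dataset driven by two hidden factors plus noise
factors = rng.normal(size=(100, 2))
X = factors @ rng.normal(size=(2, 10)) + 0.1 * rng.normal(size=(100, 10))

scores, ratio = pca_scores(X, n_components=2)
print(scores.shape)    # (100, 2): ready for a 2D scatter plot
print(ratio.round(3))  # for this two-factor toy data, PC1 and PC2 should dominate
```

Because the PC scores are uncorrelated and sorted by variance, plotting `scores[:, 0]` against `scores[:, 1]` shows the largest share of variation that any 2D orthogonal projection of the standardized data can show.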
